Hi Guys,

Today as promised we will talk about an important feauture on the columnstore indexes called Grouped Aggregate Pushdown.

We already talked about this feature two post ago here:SQL Server, the "Group By" competition: from 30 seconds to 56 milliseconds

We have seen that a columnstore index can return directly already filtered data so this feature is very powerful!

I want to repeat the official definition because in my opinion it is very beautiful:

Some aggregate computations can be pushed to the scan node instead of calculated in an aggregate node.

Where is the problem?

Activating this function is not really trivial!

Let's see, even with the help of a few examples, how to do it.

Enjoy the reading mate!

"Summary of previous episodes".. let's recap!

In the example we had done in the previous post we had run the following command:

SELECT Classifiers

FROM Movements

GROUP BY Classifiers

A simple T-SQL command with a GROUP BY clause and where there is a columnstore index on the Classifiers column.

This was its execution plan:

At this point we had used a trick to activate the grouped Aggregate Pushdown: we added a COUNT function.

So, our query became:

SELECT Classifiers, COUNT(Classifiers)

FROM Movements

GROUP BY Classifiers

By running our command again we got this execution plan:

The execution time had been drastically reduced!

The Grouped Aggregate Pushdown feature

Let's start from the beginning.

Aggregate Pushdown is a feature added with SQL Server 2016 as an optimization for aggregate queries against columnstore index.

Aggregate Pushdown is possible both with or without using a GROUP BY clause, although today we will talk only about this first case.

When pushdown is successful, aggregation is performed within the columnstore Scan operator itself. Possibly operating directly on compressed data and using SIMD CPU instructions.

However there are some conditions must be met for the pushdown to be activated.

Since pushdown aggregate apply only to compressed data you need to know some about columnstore compression algorithm.

Compression algorithm

As anticipated, pushdown aggregate apply only to compressed data: not to the rows in a delta store.

Data is compressed for more performance and compression occurs in different ways depending on the data.

There are two phases.

A first phase called encoding can be followed by a second phase called compression.

During the first phase, encoding can be done by value (Value encoding) where the number of bits is reduced through translation and multiplication operations or through a dictionary (dictionary encoding).

In this case, a dictionary is created that contains the unique values read from the data.

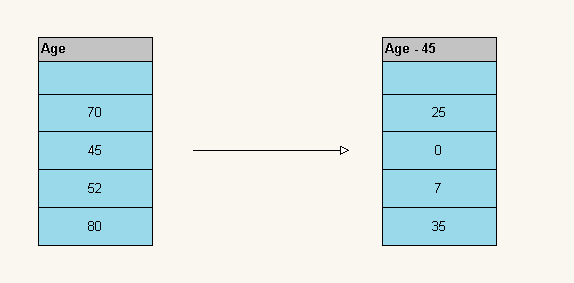

Value Encoding example.

Simply subtracting 45 we can store data using 6 bits instead of 7.

Dictionary Encoding

Each distinct Value ("A","B",...) is stored into a dictionary. Values are replaces with the classifiers id.

A second phase can follow.

Segment data may be further compressed using a RLE (run-length encoding) and bit-packing algorithms.

RLE

Repeating values are replaced with the data and the number of repeats:

Bit-Packing

Store an array of numbers, for example (2,1,5) , in binary (00000010,00000001,00000101) into a shorter form. Storing the maximum number (5) require 3 bit. All the others number are stored using 3 bit. Doing so there is no need to add spaces between numbers.

What conditions must be met to activate the Grouped Aggregate Pushdown?

These are the rules:

- As stated above data must be compressed.

- There must be no possibility of aggregate overflow. For example this could be a risk for the SUM aggregate, however there is no risk using the COUNT function.

- Important: If Bit-packed is used grouping keys should be no longer than 10 bits. RLE has no problems.

Let's analyze our Query!

Let's go now to analyze our Query.

We choose the same query and with the same data used in the previous post:

SELECT Classifiers, COUNT(Classifiers)

FROM Movements

GROUP BY Classifiers

On the classifiers column we add a nonclustered columnstore index:

CREATE COLUMNSTORE INDEX IDX_MOVS_CLASSIFIERS ON MOVS(CLASSIFIERS)

Running the Query we have as a result 4 different classifiers each repeated 1.250.000 times:

Looking at the execution plan we can see that in this case the Aggregate pushdown feature is used.

Yes, but why? ...it's time to dig deeper

Looking to the sys.column_store_row_group table we see that we have 1 rowgroup for each million of rows.

SELECT * FROM sys.column_store_row_groups t WHERE t.OBJECT_ID = OBJECT_ID('movements')

Since we have 50 million of rows we have 50 rowgroup:

To deepen further, looking at the sys.partitions system table, we get the partition_id value

Select P.* From sys.partitions p

where

P.[object_id] = Object_id('movements') AND

P.data_compression_desc = 'COLUMNSTORE'

The value searched is 72057594045071360:

Now we will use the undocumented command DBCC CSINDEX to get a bunch of informations.

You need to specify the partition id and the index_id (the value is 3 from the image above)

DBCC CSINDEX (

'testdb',

72057594045071360, -- OBJECT_ID(N'dbo.Movs', N'U'),

3, -- INDEX_ID

0,1,2,0,2

);

By running this command we can see that the command output is divided into sections.

Segment attribute section

The Segment attribute section shows the encoding type.

Looking at the encodingType label we have a value of 3 which means that dictionary encoding is used.

Possible values for the encodingType field are:

5 = STRING_STORE_BY_VALUE_BASED - string/binary with no dictionary

This make sense because there are many duplicate values.

Segment: (3:0)

Segment Attributes:

Version = 1 encodingType = 3 hasNulls = 0

BaseId = -1 Magnitude = -1.000000e+00 PrimaryDictId = 0

SecondaryDictId = -1 MinDataId = 4 MaxDataId = 7

NullValue = -1 OnDiskSize = 608 RowCount = 1048576

The next section is the RLE Header section

RLE Data is shown and this means that RLE compression is used

RLE Header:

Lob type = 3 RLE Array Count (In terms of Native Units) = 5

RLE Array Entry Size = 8

RLE Data:

Index = 0 Value = 6 Count = 524643

Index = 1 Value = 4 Count = 179857

Index = 2 Value = 7 Count = 177250

Index = 3 Value = 5 Count = 166826

Index = 4 Value = 0 Count = 0

The next section is the Bitpack Data Header section

We have a Bitpack Entry size equal to 2.

This means that only 2 bit are required to store in the dictionary our 4 distinct values 'A',B',C' and 'D'.

Bitpack Data Header:

Bitpack Entry Size = 2 Bitpack Unit Count = 0 Bitpack MinId = 4

Bitpack DataSize = 0

Bitpack Data:

As stated in the previous paragraph: Since bit-pack size (2) is not greater the 10 so: aggregate pushdown in working.

This is why aggregate pushdown works for the query we analyzed.

It is important to note that the activation or not of this functionality depends on the data contained in the table.

For example, if I had had more than 1024 different classifiers we would have had a bitpack entry size greater than 10 bits and therefore the aggregate pushdown feature would not have activated.

Luca Biondi @ SQLServerPerformance blog!

Next post:

Previous post: Ladies and gentlemen ...drumroll... SQL Server 2022 is among us! New features and improvements!