Hi Guys,

Welcome back! Some time ago I was asked what the differences were between the temporary tables and in-memory table variables.

I wrote this post with the intent to clarify! ...I hope I have succeeded.

Today I wanted to present a comparison between temporary tables (#) and variables of type table (@) .

We will analyze in detail the differences between these two types of tables to understand when it is better to use one type rather than another.

But not only that: You will have the opportunity to see how to read the transaction log and use the RML utilities to generate the parallel workload necessary for our analysis.

We use the term "temporary tables" because it often happens in our calculations that we need an "intermediate" table on which to place data.

Due to the fact that our tables are "temporary" after use we will not need to delete them: this is certainly a great convenience.

"Temp Tables" (#) Vs. "Table Variables" (@) ... LOP

To create a temp table, simply generate a table whose name begins with the # character.

Example:

CREATE TABLE #ARTICO (CODICE VARCHAR(80), COLORE VARCHAR(80), POSIZIONE VARCHAR(80))

The second type of table is called a table variable.

It is resident in memory and is created with a different syntax:

DECLARE @ARTICOLO TABLE CODICE VARCHAR(80), COLORE VARCHAR (80), POSIZIONE VARCHAR (80))

Since we made the introduction ....let's go!

Let's now create a test environment on which we will make our considerations.

Let's create an ARTICOLI table with its CLUSTERED index on the ID field:

CREATE TABLE ARTICOLI (ID INT IDENTITY (1,1), CODICE VARCHAR(80), COLORE VARCHAR (80), POSIZIONE VARCHAR (80)) CREATE CLUSTERED INDEX IDX_ARTICOLI_ID on ARTICOLI(ID)

Then let's fill it in with some data.

For example I used this recursive method and that uses a CTE to fill the table with 1000 rows

WITH CTE AS (SELECT 1 AS CODICE, 1 AS DESCR UNION ALL SELECT CODICE + 1, CODICE + 1 AS DESCR FROM CTE WHERE CODICE < 10000) INSERT INTO ARTICOLI (CODICE,COLORE) SELECT CODICE, DESCR FROM CTE OPTION (MAXRECURSION 10000)

Finally, let's create the two Stored procedures below that we will execute to analyze their behavior.

// Write in Temp Table

CREATE PROCEDURE SP_TEMPART_# AS

BEGIN

CREATE TABLE #ARTICO (CODICE VARCHAR(80) ,COLORE VARCHAR(80) , POSIZIONE VARCHAR(80))

INSERT INTO #ARTICO(CODICE,COLORE, POSIZIONE)

SELECT TOP 10 CODICE,COLORE,POSIZIONE FROM ARTICOLI

END

// Write in Table Variable

CREATE PROCEDURE SP_TEMPART_@ AS

BEGIN

DECLARE @ARTICO TABLE (CODICE VARCHAR(80), COLORE VARCHAR(80), POSIZIONE VARCHAR(80))

INSERT INTO @ARTICO(CODICE,COLORE,POSIZIONE)

SELECT TOP 10 CODICE,COLORE,POSIZIONE FROM ARTICOLI

END

Now that our environment is ready let's start to see what happens below the scenes!

temporary table

Let's reset the transaction log and execute the first of the two stored procedures:

CHECKPOINT EXEC TEST_TEMP_TABLE..SP_TEMPART_#

SELECT DESCRIPTION,* FROM FN_DBLOG(NULL,NULL)

Are you ready to analyze it? follow me..

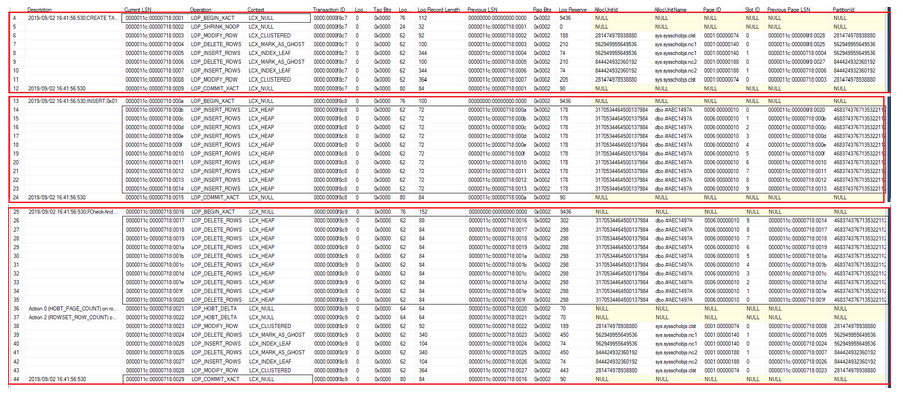

Look at all the operations that SQL Server performs.

I have divided the log into three blocks to make it more readable.

In the first block we see that a table is created and materialized in the TEMPDB database.

In the second block the rows inside the newly created table are inserted.

Here I have a row of type LOP_INSERT_ROWS for each row inserted in the temporary table (in our case 10 rows).

Finally, in the third block, the temporary table is first emptied and then deleted.

I first see a row of LOP_DELETE_ROWS for each row I delete from the temp table (in our case 10)

Then if it sees the deletion of the table itself.

To estimate “the weight of our operation” we only count here the number of LOP operations (LOP means L OGGED OP ERATION) performed by SQL Server.

We will not go into analyzing them one by one in detail because this is not the purpose of the article.

Assuming to insert N rows in the temporary table I will have a cost of:

LOP NUMBER = (CREATE TEMP TABLE) + (INSERT ROWS) + [(DELETE ROWS) + (DELETE TEMP TABLE)] = (9) + (N + 2) + [(N + 2) + (8)] = 2 * N + 21 = 41 LOP with N = 10 and table with 3 columns

Now we repeat the same analysis for the second stored procedure.

Table Variables

Then, we reset the transaction log again, execute the stored procedure and query our log.

CHECKPOINT EXEC TEST_TEMP_TABLE..SP_TEMPART_@ SELECT DESCRIPTION,* FROM FN_DBLOG(NULL,NULL)

Here it is!

What do we notice at first sight?

We immediately see that the log contains fewer lines (in our case 26 instead of 41).

And what is missing?

All the creation part of the temporary table in the TEMPDB is missing.

So what we said at the beginning of our article is correct: actually the table is in memory and not on the database.

Assuming always to insert N rows in the temporary table I will have a cost of:

LOP NUMBER = (INSERT LINES) + (DELETE LINES) = (N + 2) + (N + 4) = 2 * N + 6 = 26 LOP with N = 10 and table with 3 columns

Performance and waiting statistics comparison

| To do this we will use a tool that Microsoft makes available for free and which is called RML UTILS. We will not explain here how it works: we will only say that this tool allows the execution of a T-SQL statement or a Stored procedure in parallel thus generating a workload. In practice, we call an executable called OStress.exe to which some parameters must be passed. OStress.exe –i <FILE_NAME_WITH_SQL_EXECUTE> -U <USER> –P <PASSWORD> –S <SERVERNAME> -d <> –o <LOG_FOLDER> -n <X> -r <Y> To remember: The parameter "-i <FILE_NAME_WITH_SQL_EXECUTE>" where <file> is a file that contains the text of the T-SQL or SP command to execute The “-n <X>” parameter where the specified X value is the number of concurrent executions of our procedure. The “-r <Y>” parameter where the specified Y value is the number of times concurrent execution is performed. |

To analyze the performance we will only evaluate the execution time (parameters n = 400 and r = 20)

To analyze the types of wait statistics (the WAIT STATS), it will be necessary, before execution, to reset them using the command:

DBCC SQLPERF ('sys .dm_os_wait_stats', CLEAR);

Let's test the insertion in the temporary table (#)

Type the command CMD.EXE

Type the following command to execute the SP_TEMPART_# stored procedure in parallel on 400 Threads repeating everything for 20 times

OStress.exe -ic: \OStress\SP_test_temptable\testtemptable_#.SQL -UAdmin -PPwd -SSERVER -dtest_temp_table -oc:\ostress\SP_test_temptable -n400 -r20

We get an execution time of 6.276 seconds

Let's test the insertion in the table variable (@)

Results:

We get an execution time of 5.496 seconds.

Now let's look at the wait statistics.

What differences do we find?

The execution time is shorter but above all in the wait statistics we do not have PAGELATCH_XX and LATCH_XX type wait types.

Which type of support table is best to use?

When the number of records that we need to insert in the temporary table are a limited number, it is preferable to use the table variables (@).The execution is slightly faster and we therefore avoid generating PAGELATCH type waiting types.

If, on the other hand, there is a large number of data to be inserted, you can orient yourself on the temporary table (#)

In this case we can add an index to access the data more quickly.

In fact, remember that table variables do not support indexing for obvious reasons.

That's all for today! I hope you enjoed the post!

Luca Biondi @ SQLServerPerformance blog!

Previous post: Last attempt to access SQL and the "Dedicated Admin Connection" (DAC)