With today we conclude this series of post related to the last page insert contention issue speaking of a new feature introduced with SQL Server 2019.

This new feature is called OPTIMIZE_FOR_SEQUENTIAL_KEY and it is intended to reduce the latch contention. It is actually a clause that you can specify when creating an index .

First we will see how it works then we will do some tests

Are you ready? Go!

The OPTIMIZE_FOR_SEQUENTIAL_KEY feature

In order to use this feature you need to add the OPTIMIZE FOR SEQUENTIAL KEY = ON to the definition of the index:

Just remember to set the compatibility level to 15, which is the native SQL Server 2019 level.

Yes but how does this clause work internally?

I think this part of the theory is very interesting and I am happy to tell it. As usually I'll try to be as clear as possible.

Let's deepen!

Let's say that Microsoft did not want to make significant changes to the database engine through this clause, rather it focused on understanding the logic behind the memory page contention mechanism.

What we have already understood is that the pagelatch contention phenomenon introduces latency into the application. But this is inevitable since only one worker thread can access the memory page at a time.

However, what actually reduces the scalability of the application is not so much the contention of latches but another phenomenon that Microsoft calls LATCH CONVOY. We could translate this term with "convoy of latches" in the sense of many Latches moving one behind the other in queue.

Now let's put ourselves in this example.

Imagine a line of Latches moving in line one after the other. We think this Latch tail is like us in our car.

Poetically :-) ... Like on the busy highway in August, everything runs smoothly as long as the traffic (and therefore all of us) moves at the same speed.

But suppose we run into some work in progress where our two beautiful lanes suddenly become one.

Two things happen:

This is why a bottleneck causes a noticeable slowdown.

By analogy, in the same way, returning to our SQL Server this is why a bottleneck causes a noticeable drop in throughput.

If something slows down the execution of one of our threads and this holds its latch for a longer time, all the remaining latches will match and the throughput will drop dramatically.

But what could be slowing down the execution of one of our threads?

Typically in our case the slowdown is due to a phenomenon called Page Split.

Page Split occurs when a memory page gets completely filled and a new one has to be added to the index .

Furthermore, adding a new page in memory requires an exclusive type (EX) latch on the parent page and this can in turn cause a Latch request at that level.

And here is the idea behind the creation of the OPTIMIZE_FOR_SEQUENTIAL_KEY clause:

CREATE CLUSTERED INDEX [IDX_ARTICO_CLUSTERED_ID] ON [dbo].[ARTICO] ( [ID] ASC )WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, SORT_IN_TEMPDB = OFF, DROP_EXISTING = OFF, ONLINE = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON, OPTIMIZE_FOR_SEQUENTIAL_KEY = ON) ON [PRIMARY] Just remember to set the compatibility level to 15, which is the native SQL Server 2019 level.

The magic behind the movie!

Yes but how does this clause work internally?

I think this part of the theory is very interesting and I am happy to tell it. As usually I'll try to be as clear as possible.

Let's deepen!

Let's say that Microsoft did not want to make significant changes to the database engine through this clause, rather it focused on understanding the logic behind the memory page contention mechanism.

What we have already understood is that the pagelatch contention phenomenon introduces latency into the application. But this is inevitable since only one worker thread can access the memory page at a time.

However, what actually reduces the scalability of the application is not so much the contention of latches but another phenomenon that Microsoft calls LATCH CONVOY.

Now let's put ourselves in this example.

Imagine a line of Latches moving in line one after the other. We think this Latch tail is like us in our car.

Poetically :-) ... Like on the busy highway in August, everything runs smoothly as long as the traffic (and therefore all of us) moves at the same speed.

But suppose we run into some work in progress where our two beautiful lanes suddenly become one.

Two things happen:

- All cars will be forced to travel the remaining lane at a lower speed.

- Before the traffic bottleneck a queue will form and increase until the bottleneck is removed.

This is why a bottleneck causes a noticeable slowdown.

If something slows down the execution of one of our threads and this holds its latch for a longer time, all the remaining latches will match and the throughput will drop dramatically.

But what could be slowing down the execution of one of our threads?

Typically in our case the slowdown is due to a phenomenon called Page Split.

Page Split occurs when a memory page gets completely filled and a new one has to be added to the index .

Furthermore, adding a new page in memory requires an exclusive type (EX) latch on the parent page and this can in turn cause a Latch request at that level.

And here is the idea behind the creation of the OPTIMIZE_FOR_SEQUENTIAL_KEY clause:

- Check the rate at which new threads are allowed to request latch

- Favor threads that can keep througput high.

In fact, limiting the execution of a thread means that, having this thread individually a higher latency, it is possible to reduce or avoid the phenomenon of thread queuing (convoy).

This allows all other threads to proceed faster.

Similarly favoring threads that can complete their execution in the amount of time that the scheduler allots them (4 ms) increases the througput.

In practice, as we have seen from our test, we will not see the PAGELATCH type waits drop, but we will see a new type of wait type called BTREE_INSERT_FLOW_CONTROL: It must be said, however, that if you are not subject to the thread queuing phenomenon (Convoy), you will not you will see big benefits. You may even experience a small performance degradation due to the additional flow control logic.

Let's say that we can consider this clause as an extra card to play in the event that we find ourselves in a position to experience a particularly high latch contention.

Very good! We have therefore proved that this new clause has an effect.

Effects that are all worth trying.

In fact, keep in mind that Microsoft itself recommends its activation only if there is really a problem of last page insert latch contention.

Failure to do so could even lead to a loss of performance.

That's all for today! I hope yuo enjoyed the post, if so.... Stay Tuned!

This allows all other threads to proceed faster.

Similarly favoring threads that can complete their execution in the amount of time that the scheduler allots them (4 ms) increases the througput.

In practice, as we have seen from our test, we will not see the PAGELATCH type waits drop, but we will see a new type of wait type called BTREE_INSERT_FLOW_CONTROL: It must be said, however, that if you are not subject to the thread queuing phenomenon (Convoy), you will not you will see big benefits. You may even experience a small performance degradation due to the additional flow control logic.

Let's say that we can consider this clause as an extra card to play in the event that we find ourselves in a position to experience a particularly high latch contention.

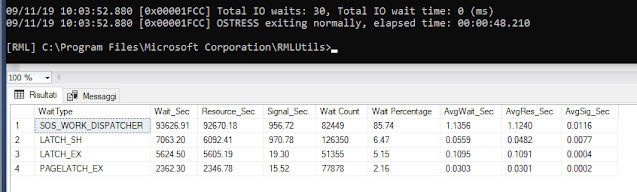

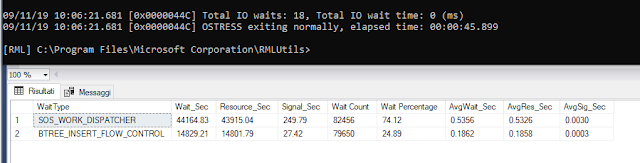

Now we drop the clustered index and reinsert it using this syntax:

CREATE CLUSTERED INDEX [IDX_ARTICO_CLUSTERED_ID] ON [dbo].[ARTICO] ( [ID] ASC )WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, SORT_IN_TEMPDB = OFF, DROP_EXISTING = OFF, ONLINE = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON, OPTIMIZE_FOR_SEQUENTIAL_KEY = ON) ON [PRIMARY] These are the results with the OPTIMIZE_FOR_SEQUENTIAL_KEY clause ON

Well, tell me what you notice right away.

The execution time has been reduced from 48 to 45 seconds!

Then? Wait types LATCH_SH, LATCH_EX and PAGELATCH_EX are no longer present.

In their place a new type of wait state called BTREE_INSERT_FLOW_DISPATCHER appeared.

Effects that are all worth trying.

In fact, keep in mind that Microsoft itself recommends its activation only if there is really a problem of last page insert latch contention.

Failure to do so could even lead to a loss of performance.

So the advice is to check if you actually have this type of problem and then, only afterwards, carry out a test with and without this clause activated!

Luca Biondi @ SQLServerPerformance blog 2021!Next post: