Hi guys,

September is coming and it is time for our editorial staff to reopen its doors. I hope you have recharged your batteries to 100%.

Today we will talk about statistics and performance. Yes, we have talked about it many times before but today we will focus on an issue that could lead to a poor performance situation.

Maybe your query is poor performing due to this issue?

Ready? Go!

Statististics and the Ascending Key Problem

Statistics need to be updated because they help SQL server create a good execution plan. I like to do an example by making a parallel between extracting data from the database and a trip: if i have to go to work and my office is near me it is better to take a bicycle. If my work is far from me it is better take the car or the train.

Likewise, if I need to extract a few records, the optimizer can implement a JOIN by choosing a nested loop. Otherwise it would be better to use other operators such as merge or hash.

This is why statistics are important. Because updating statistics is a time-consuming process, statistics are not updated with every change made to a table.

The problem arises when you have a table with a clustered index.

So, suppose we have a table with a few million rows.

Now, for example, let's develop a procedure that inserts a few hundred rows.

Statistics are not updated because the number of rows inserted is lower than the threshold used by SQL Server.

What happens?

It happens that the estimated cardinality is 1 and not the correct one of a few hundred.

This problem could lead to the creation of a not good execution plan.

There are some traceflags to change the threshold used by SQL Server and starting with SQL Server 2016 onwards things are changed again.

But this post was written to clarify a logic that could lead to a poor performance situation.

As usual, a good example is the best way to understand how things work!

Example

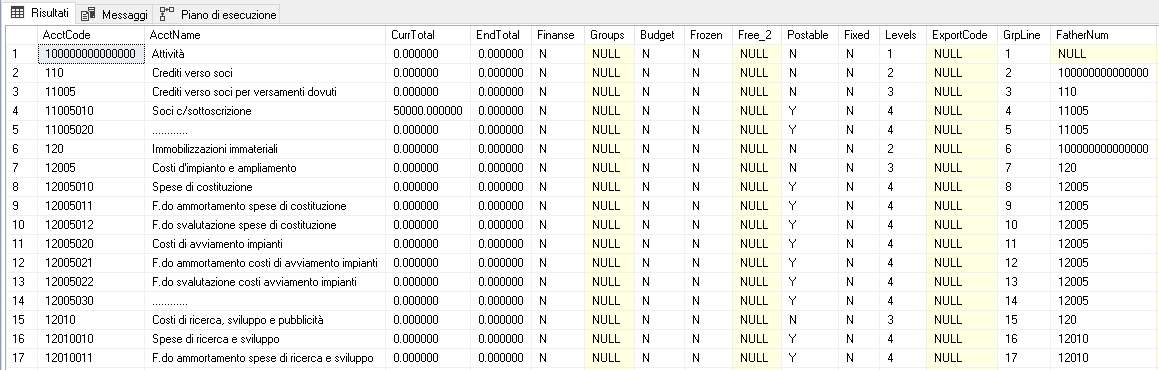

Let's take as an example a table of the SAP Business One Erp2 (This ERP supports two different database engines: SQL Server and the proprietary in memory rdbms SAP HANA )

We choose for example the table OACT.

The table OACT has a clustered index on the AcctCode field:

Executing the command

DBCC SHOW_STATISTICS('OACT', OACT_PRIMARY)

We can see that statistics are updated to 8th of January 2021

This table has 568 rows.

Let's now insert 50 Rows (50/568 equal to 8%)

INSERT INTO [dbo].[oact]

([acctcode],

[acctname],

[currtotal],

[endtotal],

[finanse],

[groups],

[budget],

[frozen],

[free_2],

[postable],

[fixed],

[levels],

[exportcode],

[grpline],

[fathernum],

[accntntcod],

[cashbox],

[groupmask],

[ratetrans],

[taxincome],

[exmincome],

[extrmatch],

[intrmatch],

[acttype],

[transfered],

[blnctrnsfr],

[overtype],

[overcode],

[sysmatch],

[prevyear],

[actcurr],

[ratedifact],

[systotal],

[fctotal],

[protected],

[realacct],

[advance],

[createdate],

[updatedate],

[frgnname],

[details],

[extrasum],

[project],

[revalmatch],

[datasource],

[locmth],

[mthcounter],

[bnkcounter],

[usersign],

[locmantran],

[loginstanc],

[objtype],

[validfor],

[validfrom],

[validto],

[validcomm],

[frozenfor],

[frozenfrom],

[frozento],

[frozencomm],

[counter],

[segment_0],

[segment_1],

[segment_2],

[segment_3],

[segment_4],

[segment_5],

[segment_6],

[segment_7],

[segment_8],

[segment_9],

[formatcode],

[cfwrlvnt],

[exchrate],

[revalacct],

[lastrevbal],

[lastrevdat],

[dfltvat],

[vatchange],

[category],

[transcode],

[overcode5],

[overcode2],

[overcode3],

[overcode4],

[dflttax],

[taxpostacc],

[acctstrle],

[meaunit],

[baldirect],

[usersign2],

[plnglevel],

[multilink],

[prjrelvnt],

[dim1relvnt],

[dim2relvnt],

[dim3relvnt],

[dim4relvnt],

[dim5relvnt],

[accrualtyp],

[datevacct],

[datevautoa],

[datevfirst],

[snapshotid],

[pcn874rpt],

[scadjust],

[bplid],

[bplname],

[subledgern],

[vatregnum],

[actid],

[closingacc],

[purpcode],

[refcode],

[blocmanpos],

[priacccode],

[cstacconly],

[allowefrom],

[allowedto],

[balancea],

[rmrktmpt],

[cemrelvnt],

[cemcode],

[stdactcode],

[taxoncode],

[inclasstyp],

[inclasscat],

[exclasstyp],

[exclasscat])

SELECT TOP 50 'Z' + LEFT(Ltrim([acctcode]), 14),

[acctname],

[currtotal],

[endtotal],

[finanse],

[groups],

[budget],

[frozen],

[free_2],

[postable],

[fixed],

[levels],

[exportcode],

[grpline],

[fathernum],

[accntntcod],

[cashbox],

[groupmask],

[ratetrans],

[taxincome],

[exmincome],

[extrmatch],

[intrmatch],

[acttype],

[transfered],

[blnctrnsfr],

[overtype],

[overcode],

[sysmatch],

[prevyear],

[actcurr],

[ratedifact],

[systotal],

[fctotal],

[protected],

[realacct],

[advance],

[createdate],

[updatedate],

[frgnname],

[details],

[extrasum],

[project],

[revalmatch],

[datasource],

[locmth],

[mthcounter],

[bnkcounter],

[usersign],

[locmantran],

[loginstanc],

[objtype],

[validfor],

[validfrom],

[validto],

[validcomm],

[frozenfor],

[frozenfrom],

[frozento],

[frozencomm],

[counter],

[segment_0],

[segment_1],

[segment_2],

[segment_3],

[segment_4],

[segment_5],

[segment_6],

[segment_7],

[segment_8],

[segment_9],

[formatcode],

[cfwrlvnt],

[exchrate],

[revalacct],

[lastrevbal],

[lastrevdat],

[dfltvat],

[vatchange],

[category],

[transcode],

[overcode5],

[overcode2],

[overcode3],

[overcode4],

[dflttax],

[taxpostacc],

[acctstrle],

[meaunit],

[baldirect],

[usersign2],

[plnglevel],

[multilink],

[prjrelvnt],

[dim1relvnt],

[dim2relvnt],

[dim3relvnt],

[dim4relvnt],

[dim5relvnt],

[accrualtyp],

[datevacct],

[datevautoa],

[datevfirst],

[snapshotid],

[pcn874rpt],

[scadjust],

[bplid],

[bplname],

[subledgern],

[vatregnum],

'Z' + LEFT(Ltrim([acctcode]), 14) AS [ActId],

[closingacc],

[purpcode],

[refcode],

[blocmanpos],

[priacccode],

[cstacconly],

[allowefrom],

[allowedto],

[balancea],

[rmrktmpt],

[cemrelvnt],

[cemcode],

[stdactcode],

[taxoncode],

[inclasstyp],

[inclasscat],

[exclasstyp],

[exclasscat]

FROM oact

WHERE acctcode <> '100000000000000'

To verify that statistics are not changed type again the command:

DBCC SHOW_STATISTICS('OACT', OACT_PRIMARY)

Now type the command

SELECT * FROM OACT where AcctCode >= 'A00000000000000'

While 51 rows are returned watching to the property we can clearly see that the estimated number of lines to read is 1.

This is the problem that could lead to the creation of an not optimal execution plan!

Using the Traceflag 2371

Starting from SQL Server 2016 this behaviour is enabled by default.

This is a summary:

| Table type | Table cardinality | Up to SQL2014 (12.x) Recompilation threshold (# modifications) or TF 2371 | From SQL2016 (13.x) Recompilation threshold (# modifications) |

|---|---|---|---|

| Temporary | n<6 td=""> | 6 | 6 |

| Temporary | 6<=n<=500 | 500 | 500 |

| Permanent | n<=500 | 500 | 500 |

| Temporary or Permanent | n>=500 | 500 + (0.20 * n) | Min between 500 + (0.20 * n) and SQRT(1000 + n) |

I hope you found the reading interesting and if so follow me!

Luca Biondi @ SQLServerPerformance blog 2019-2021